AMD × OpenAI: The Six‑Gigawatt AI Compute Buildout

A visual digest of the interview with AMD CEO Lisa Su and OpenAI co‑founder Greg Brockman on their multi‑location, multi‑year plan to deploy up to 6 GW of AI compute focused on inference.

Why This Matters

OpenAI reports explosive product demand—~800M weekly active users—yet the pace of launching new features is constrained by a global “compute desert.” The partnership aims to unlock inference capacity so models can serve billions of requests reliably and efficiently.

Greg Brockman: “We cannot launch features or new products simply because of lack of computational power… We’re trying to build as much as possible as quickly as possible.”

What’s Being Built

- Phase‑1 deployment of ~1 GW in the second half of 2026.

- Scale up toward 6 GW across multiple locations and providers.

- Stack optimized for inference using AMD’s Instinct MI450 silicon.

- Co‑engineering on hardware, software (ROCm), and supply chain.

Strategic Pillars

Energy

Powering AI

Scaling data centers requires substantial power. Nuclear and other reliable sources are emphasized to bring capacity online faster.

Manufacturing

US‑first

AMD prioritizes building in the United States while leveraging global partners like TSMC for advanced nodes and packaging.

Cloud + On‑Prem

Multi‑location

Deployments will span OpenAI‑operated data centers and cloud providers (e.g., Oracle), accelerating time‑to‑capacity.

Financial Flywheel

More compute → More AI products → More revenue → More compute. AMD benefits through accretive chip sales, while OpenAI funds expansion via fast‑growing AI revenue streams and creative financing.

- OpenAI: exploring equity, debt, and structured financing.

- AMD: revenue & earnings lift as deployments scale.

Why Inference?

Training is episodic; inference is continuous. Serving billions of model calls demands efficiency, latency control, and cost discipline. MI450 targets memory bandwidth, perf‑per‑watt, and scalable chiplet design to reduce total cost of ownership for always‑on workloads.

Lisa Su: “This is the largest deployment we’ve announced. It’s an all‑in commitment… with engineering across hardware, software, and supply chain.”

Milestone Timeline

Now → 2026

Co‑design, ROCm optimization, supply‑chain locking, site selection, and energy commitments.

H2 2026

Phase‑1: ~1 GW inference capacity comes online using AMD Instinct MI450.

Post‑2026

Multi‑site expansion toward 6 GW, onboarding additional providers and regions.

Ecosystem Effects

- Energy build‑outs: accelerates grid investments; nuclear gains relevance.

- Semis & packaging: sustained demand for HBM, advanced 2.5D/3D integration.

- Geopolitics: compute becomes a national‑security resource; US leadership prioritized.

- Cloud: multi‑provider strategy reduces time‑to‑capacity and vendor risk.

Key Quotes

- “Compute is the foundation for all of the intelligence we can get from AI.” — Lisa Su

- “We just want compute… as much as possible.” — Greg Brockman

- “If you can have 10× as much AI power behind you, you’ll probably be 10× more productive.” — Greg Brockman

Success Metrics to Watch

| Metric | Why It Matters |
|---|---|
| Inference $/token | Primary driver of product margins at scale. |
| Latency (p95/p99) | Reliability & user experience for real‑time use cases. |
| Energy per request | Opex & sustainability for always‑on workloads. |
| HBM availability | Critical for large‑context models & throughput. |
| ROCm adoption | Developer velocity beyond single‑vendor ecosystems. |
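The first three metrics in the table can be computed from ordinary serving logs. The sketch below is a minimal illustration, not a description of how AMD or OpenAI measure them: the function names, the nearest-rank percentile method, and every sample number are assumptions made up for the example.

```python
import math

# Illustrative helpers for three of the metrics above.
# All names and sample values are hypothetical, not real deployment data.

def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least
    pct percent of all samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

def cost_per_million_tokens(total_cost_usd, tokens_served):
    """Serving cost normalized to one million tokens."""
    return total_cost_usd * 1_000_000 / tokens_served

def energy_per_request_wh(average_power_watts, window_hours, requests_served):
    """Average watt-hours consumed per request over a measurement window."""
    return average_power_watts * window_hours / requests_served

# Made-up latency samples in milliseconds; one slow outlier dominates the tail.
latencies_ms = [120, 135, 128, 140, 132, 125, 138, 130, 900, 127]

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
usd_per_mtok = cost_per_million_tokens(500.0, 250_000_000)
wh_per_request = energy_per_request_wh(1000.0, 1.0, 4000)

print(p50, p95, usd_per_mtok, wh_per_request)
```

In this toy sample the median looks healthy while p95 is set entirely by the single slow request, which is why tail percentiles rather than averages are the figure to watch for real-time use cases.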

Risk Map

- Supply chain: Foundry and HBM constraints.

- Power: Siting delays; grid interconnect timelines.

- Software: Porting & optimization debt for diverse workloads.

- Financing: Pace of revenue vs. capex cadence.

- Competition: Rapid iteration from incumbents across training & inference.

Bottom Line

This alliance is an industrial‑scale bet that compute scarcity is the gating factor for AI progress. By coordinating silicon, software, power, and financing, AMD and OpenAI aim to turn a compute desert into a fertile AI economy—starting with ~1 GW in H2 2026 and marching toward 6 GW across multiple locations and providers.

Shareable badges

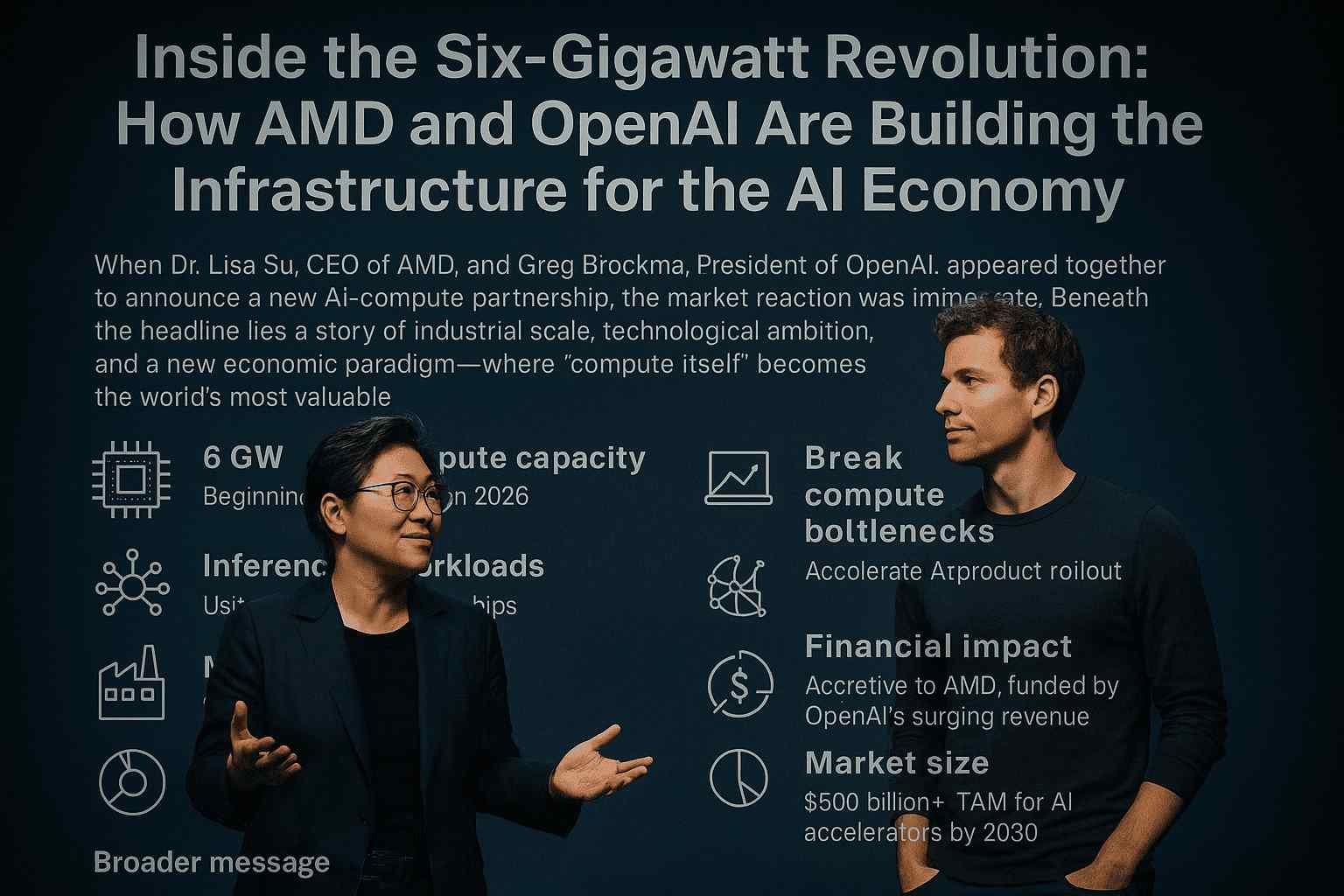

Inside the Six-Gigawatt Revolution: How AMD and OpenAI Are Building the Infrastructure for the AI Economy

When Dr. Lisa Su, CEO of AMD, and Greg Brockman, President of OpenAI, appeared together to announce a new multi-gigawatt AI-compute partnership, the market reaction was immediate.

AMD shares spiked, analysts scrambled to update their spreadsheets, and the tech world paused to absorb what this deal really means.

Because beneath the headline lies a story of industrial scale, technological ambition, and a new economic paradigm—where compute itself becomes the world’s most valuable commodity.

1. The Birth of a Compute Super-Alliance

“Compute is the foundation for all of the intelligence we can get from AI,” Lisa Su said.

“And we are a compute provider.”

Those two lines summarize AMD’s philosophy perfectly.

After years of battling Intel in CPUs and Nvidia in GPUs, AMD has spent the past decade quietly building an alternative compute stack for the AI age—its Instinct accelerators, ROCm software, and upcoming MI450 chips.

Now that groundwork is paying off.

This partnership with OpenAI isn’t just a sale—it’s a strategic alignment around the single biggest bottleneck in artificial intelligence today: lack of computational power.

OpenAI reports roughly 800 million weekly active users across products like ChatGPT and DALL·E, and demand keeps exploding.

As Brockman put it bluntly:

“We cannot launch features or new products simply because of lack of computational power.”

That’s why the first phase of this deal begins with 1 gigawatt of new compute capacity (enough for roughly one million high-end GPUs at about 1 kW each, all-in) before expanding to 6 gigawatts over time.

If completed, it will rank among the largest AI infrastructure projects ever attempted.
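The gigawatt-to-GPU conversion is simple division, and a quick sketch makes the sensitivity visible. The per-accelerator draw of 1 kW and the PUE (power usage effectiveness) overhead factor of 1.25 below are illustrative assumptions, not published MI450 or OpenAI figures, and the function name is hypothetical.

```python
# Back-of-envelope check: how many accelerators fit in a facility power budget?
# Per-device power and PUE values are illustrative assumptions only.

GIGAWATT = 1_000_000_000  # watts

def accelerators_for_budget(facility_watts, watts_per_accelerator, pue=1.0):
    """Accelerators supported when each draws `watts_per_accelerator` of IT
    power and `pue` scales total facility power relative to IT power."""
    if watts_per_accelerator <= 0:
        raise ValueError("watts_per_accelerator must be positive")
    if pue < 1.0:
        raise ValueError("pue cannot be below 1.0")
    it_watts = facility_watts / pue
    return int(it_watts // watts_per_accelerator)

# About 1 kW all-in per accelerator gives the "roughly one million" figure.
phase_one = accelerators_for_budget(1 * GIGAWATT, 1_000)
full_build = accelerators_for_budget(6 * GIGAWATT, 1_000)

# If the 1 kW covers only the device and cooling adds 25% on top (PUE 1.25),
# the same gigawatt supports fewer accelerators.
phase_one_with_overhead = accelerators_for_budget(1 * GIGAWATT, 1_000, pue=1.25)

print(phase_one, full_build, phase_one_with_overhead)
```

Under these assumptions the count swings from one million down to 800,000 per gigawatt depending on whether cooling and distribution overhead is included, so any GPU count quoted for a power budget is only as good as the per-device figure behind it.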

2. From Chatbots to the AI Economy

To understand why six gigawatts even makes sense, consider how the AI economy has evolved.

In 2022, generative AI was a curiosity.

By 2025, it became a necessity—embedded in search engines, design tools, customer support, and enterprise automation.

Every new model (GPT-5, Codex Pro, Sora, Whisper 3) demands substantially more processing power than the last.

Brockman calls this reality a “compute desert”—a world where innovation is throttled not by creativity, but by hardware scarcity.

“We’re heading to a world where so much of the economy is going to be lifted up and driven by progress in AI,” he explained.

“We’re trying to build as much as possible, as quickly as possible.”

This isn’t hyperbole.

Goldman Sachs projects that AI could add $7 trillion to global GDP over the next decade.

But to get there, companies like OpenAI, AMD, Nvidia, Oracle, and others must literally build the physical backbone of intelligence.

3. Why Inference Is the Next Frontier

Most people think AI infrastructure is about training massive models.

But the real long-term challenge is inference—the act of running those models at scale for billions of users, every second of every day.

Training might happen once; inference happens forever.
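A toy calculation shows why a continuous workload eventually dwarfs a one-off one. Both inputs below are hypothetical round numbers chosen for the arithmetic, not OpenAI or AMD figures, and the function name is an assumption for this sketch.

```python
# Toy comparison of a one-off training run against an always-on serving fleet.
# Both inputs are hypothetical round numbers, not real workload data.

def first_day_inference_exceeds_training(training_gpu_hours, daily_inference_gpu_hours):
    """Return the first whole day on which cumulative inference GPU-hours
    strictly exceed the GPU-hours spent on the single training run."""
    if training_gpu_hours < 0 or daily_inference_gpu_hours <= 0:
        raise ValueError("training must be >= 0 and daily inference must be > 0")
    # After d days inference has used d * daily hours; we need d * daily > training.
    return training_gpu_hours // daily_inference_gpu_hours + 1

TRAINING_GPU_HOURS = 3_000_000        # assumed size of one training run
DAILY_INFERENCE_GPU_HOURS = 50_000    # assumed steady serving load per day

crossover_day = first_day_inference_exceeds_training(
    TRAINING_GPU_HOURS, DAILY_INFERENCE_GPU_HOURS
)
print(f"Inference overtakes training on day {crossover_day}")
```

With these made-up inputs the serving fleet overtakes the training run in about two months, and every day after that adds to the gap.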

Lisa Su emphasized that AMD’s MI450 series is optimized for exactly this: high-efficiency inference at massive throughput, with better performance-per-watt than earlier generations.

OpenAI’s first one-gigawatt deployment in 2026 will use these chips.

“This is certainly the largest deployment we have announced by far,” Su confirmed.

“These types of partnerships take years to build. This is an all-in commitment.”

Inference computing is where AMD can finally break Nvidia’s near-monopoly.

While Nvidia dominates training workloads with its CUDA ecosystem, inference workloads reward efficiency, cost, and flexibility—areas where AMD’s open architecture and ROCm software shine.

4. The Engineering of Scale

Building six gigawatts of compute is not a press-release milestone—it’s an engineering marathon.

Each gigawatt could power several hyperscale data-center campuses.

Su and Brockman confirmed the build will occur across multiple locations and cloud providers, including AMD’s partners like Oracle Cloud.

“This requires the entire supply chain to wake up,” said Brockman.

“From energy production—nuclear will be critical—to manufacturing and cloud deployment.”

Power, chips, servers, and cooling—every link must scale together.

And unlike past chip booms, this one collides with global energy constraints and geopolitical priorities.

That’s why both executives stressed U.S. manufacturing leadership.

AMD continues to work closely with TSMC, but Su noted that “we’re absolutely prioritizing building in the United States because that’s super important.”

That priority aligns with CHIPS Act incentives and with the national-security logic that compute is now a strategic resource.

5. Financing the AI Buildout

When you hear “six gigawatts,” you also hear “billions of dollars.”

So how will OpenAI fund this astronomical expansion?

Brockman’s answer: through revenue velocity.

“Our revenue is growing faster than almost any product in history,” he said.

“At the end of the day, the reason this compute power is so worthwhile is because the revenue will be there.”

That doesn’t mean there won’t be financial engineering involved.

OpenAI is exploring equity, debt, and creative financing structures, possibly backed by energy partnerships or compute-as-a-service models.

AMD, meanwhile, benefits immediately.

Each chip OpenAI buys boosts AMD’s earnings.

“This deal is a win for AMD, for OpenAI, and for our shareholders,” Su explained.

“As OpenAI buys chips, our revenue goes up, our earnings go up—it’s accretive from day one.”

In essence, AMD and OpenAI have created a mutually reinforcing growth loop—compute demand drives chip sales, which fund more compute, which drives more AI products, which in turn create more demand.

A virtuous cycle at an industrial scale.

6. The Supply-Chain Challenge

Both leaders acknowledge that the biggest risks aren’t technical—they’re logistical.

Chip manufacturing, server assembly, and power-grid expansion must all align.

The U.S. semiconductor ecosystem has made progress, but wafer capacity remains tight, and high-bandwidth memory (HBM) components are in chronic shortage.

AMD’s close partnership with TSMC gives it priority access to leading-edge nodes, but Su confirmed that her company is meticulously managing every part of the supply chain.

“We’re ensuring the supply chain, the hardware, the software—all of those elements are set up and ready to deliver on this massive commitment.”

OpenAI, for its part, is coordinating with cloud providers, energy firms, and even nuclear projects to secure the necessary infrastructure.

“We think compute is going to become a national-security resource,” Brockman added.

“Every country will need computational power.”

That sentiment echoes broader U.S. policy: compute sovereignty is the new oil independence.

7. The Technology Inside AMD’s MI450

Though still unreleased, AMD’s MI450 series is already drawing attention.

It represents the culmination of years of R&D into memory-coherent interconnects, advanced packaging, and AI-optimized instructions.

Compared with the earlier MI300 generation, the MI450 promises:

- Higher memory bandwidth for large-language-model inference.

- Improved energy efficiency per operation.

- Scalable chiplet design allowing for better yields and lower costs.

- Compatibility with AMD’s ROCm software stack, making it easier for OpenAI to integrate.

Brockman praised the chip directly:

“The MI450 looks like it’s going to be a really incredible chip. Different workloads require different balances of memory and compute. Having that diversity accelerates what we can do.”

That last point matters: AI workloads are no longer monolithic.

Some require massive GPU clusters for transformer models, others rely on CPU–GPU hybrids for retrieval or reasoning tasks.

AMD’s flexible architecture allows OpenAI to mix and match configurations across data centers.

8. The Path to the First Gigawatt

The first operational milestone—one gigawatt of compute—is scheduled for the second half of 2026.

Su described it as both a technical and commercial milestone:

“The first gigawatt of deployment is super important. We’re going to start that in the second half of next year and build on from there.”

Beyond raw compute, the roadmap includes adoption milestones (getting models to run efficiently on AMD hardware) and proliferation milestones (making those capabilities available across multiple cloud environments).

This incremental ramp mirrors how cloud infrastructure historically scaled: AWS didn’t appear overnight; it grew data center by data center.

Similarly, OpenAI’s global compute footprint will expand through modular deployments tied to energy availability and chip production schedules.

9. A New Model of Partnership: Tech Meets Finance

A striking aspect of this announcement is how tightly financial incentives are woven into technological progress.

Traditionally, chipmakers sold hardware; software firms built on top.

Now, the lines are blurring.

AI companies are co-funding hardware buildouts, chipmakers are offering equity or revenue-sharing models, and both sides are synchronizing product roadmaps years in advance.

“You’re seeing more AI users and chip designers becoming financially tied to each other,” the interviewer noted.

“Is this the future?”

Brockman didn’t hesitate:

“We’re heading to a world where the economy itself will be limited or accelerated by computational power. The whole industry has to rise to meet the occasion.”

In that world, access to compute becomes the new competitive moat.

Companies, nations, and even individuals with better AI horsepower will simply be more productive.

Or as Brockman put it:

“If you have ten times as much AI power behind you, you’ll probably be ten times more productive.”

10. The Macro View: A $500 Billion Market in Motion

For AMD, this partnership validates its long-term bet on heterogeneous compute.

Su estimated the total addressable market (TAM) for AI accelerators at over $500 billion in the next few years.

She now admits that might be conservative.

“There’s so much need for compute,” she said.

“You’re going to see more players coming in. But this is a big validation of our technology and capability.”

AMD’s advantage lies in diversification.

While Nvidia continues to dominate training, AMD is carving its niche in inference and hybrid computing.

And because the company doesn’t own its own fabs, it can flexibly scale production with partners across regions.

Investors are noticing.

Since the announcement, analysts have raised AMD’s long-term earnings forecasts, betting that the MI450 family could finally establish AMD as the second pillar of global AI infrastructure.

11. Why This Matters Beyond AMD and OpenAI

The implications ripple far beyond these two companies.

- Energy: AI’s power hunger will accelerate investment in clean and nuclear energy sources.

- Manufacturing: Global foundries will face unprecedented demand for advanced packaging and HBM.

- Geopolitics: Nations will treat compute sovereignty as a strategic policy.

- Finance: New asset classes—compute credits and AI infrastructure funds—may emerge.

- Society: Access to AI tools will increasingly depend on who controls the underlying compute.

In short, the “six-gigawatt deal” is not just a tech story—it’s a preview of how the 2030s economy will function.

12. The Human Element: Vision and Trust

Behind every trillion-dollar transformation are individuals willing to bet their reputations on the future.

Lisa Su, an engineer by training, has always emphasized execution over hype.

Her calm confidence throughout the interview reflects AMD’s culture: deliver, iterate, and scale.

“These partnerships take years to get comfortable with the idea that we’ll go all-in together,” she said.

“We’re working together on hardware, software, and supply chain to deliver on this massive commitment.”

Brockman echoed that trust:

“This is an industry-wide effort. We’re working with everyone to get as much compute power online as quickly as we can.”

In a market often defined by rivalry—AMD vs Nvidia, OpenAI vs Anthropic—this collaboration feels refreshingly cooperative.

Because the challenge ahead is bigger than any one company.

13. Looking Ahead: The AI Industrial Age

If the 20th century was defined by oil and electricity, the 21st will be defined by computing.

The AMD–OpenAI partnership is an early blueprint for that transformation:

- massive investment in AI infrastructure,

- multi-party coordination across energy, manufacturing, and cloud sectors,

- and a recognition that intelligence itself now depends on physical resources.

When historians look back, this might be remembered as the moment the AI industrial age truly began—when compute became the new currency of progress.

Quick Summary

- AMD + OpenAI Partnership: Up to 6 GW of AI compute capacity, beginning with ~1 GW in the second half of 2026.

- Focus Area: Inference workloads using AMD’s MI450 chips.

- Strategic Goal: Break compute bottlenecks and accelerate AI product rollout.

- Manufacturing: Priority on U.S. production, tight collaboration with TSMC.

- Financial Impact: Accretive to AMD, funded by OpenAI’s surging revenue.

- Global Implication: Compute emerges as a national-security and economic resource.

- Market Size: $500 billion+ TAM for AI accelerators over the next few years.

- Broader Message: The era of AI-powered economies has officially begun.

Conclusion: The Virtuous Cycle of Intelligence

At its heart, this partnership represents the virtuous cycle of intelligence—where progress in computation fuels smarter models, which fuel smarter tools, which fuel a smarter economy.

AMD gains scale.

OpenAI gains power.

And humanity gains access to the most transformative technology since electricity.

As Lisa Su said near the end:

“We love the work because OpenAI is the ultimate power user of our chips—and they test us in very good ways.”

That testing will soon happen on a global scale.

And as the first gigawatt of AMD silicon lights up in 2026, we’ll be witnessing not just another data center, but the birth of the infrastructure that will power the intelligence of the world.
